5.4.3 Management of risk from enteric pathogens

Waterborne disease from enteric pathogens can be considered in terms of outbreaks and endemic disease. Outbreaks are typically caused by acute incidents or failures that result in short-term exposure to elevated concentrations of pathogens. Implementation of the Framework for Management of Drinking Water Quality will prevent the occurrence of drinking water outbreaks.

Endemic disease refers to the relatively constant background level of illness in the community. The extent to which drinking water quality may impact this background illness is not well understood. However, contaminated source waters with inadequate treatment are likely contributors (see section 5.3). The approach adopted in the Guidelines to manage risk from enteric pathogens ensures that drinking water treatment is targeted to the magnitude of contamination in the source water. That is, source water from heavily impacted catchments will require a higher level of treatment in comparison to source water from protected catchments in order to achieve safe drinking water. Specifically, the approach involves the following stages:

Defining a risk-based benchmark of safety

Assessing the level of contamination and assigning a source water category

Assessing treatment need based on source water category

Ensuring the treatment need is met.

Benchmark of safety: Microbial safety and the water safety continuum

There is no such thing as zero risk, so when quantifying microbial safety within a risk framework it is necessary to define a level that is considered to be tolerable or safe. The definition of microbial safety used in these Guidelines for drinking water is a health outcome target of 1 × 10⁻⁶ Disability Adjusted Life Years (DALY or 1 µDALY) per person per year (pppy) (as discussed in Appendix 3). It is the same health outcome target adopted by the Australian Guidelines for Water Recycling (2006, 2008, 2009, 2018), WHO (2017) and Health Canada (2019).

The microbial health outcome target of 1 × 10⁻⁶ DALY pppy should be applied as an operational benchmark rather than a pass/fail guideline value (Walker 2016). It should not be used as a measure of regulatory compliance. This benchmark serves two important purposes:

setting a definitive target for defining microbially-safe drinking water

informing improvement programs to enhance safety of drinking water as per element 12 of the Framework for Management of Drinking Water Quality.

Immediate compliance of all drinking water supplies with the health outcome target of 1 × 10⁻⁶ DALY pppy is not expected (see Box 5.1). Application of the target is expected to follow a pattern similar to that described in Section 2.5 of the Framework Overview. It is acknowledged that it will be more challenging for some drinking water supplies to meet this target, particularly those in rural and remote areas. What is important is getting started towards meeting the target. In the meantime, decisions about the existing safety of drinking water supplies will be a matter for drinking water utilities in consultation with the relevant health authority or drinking water regulator.

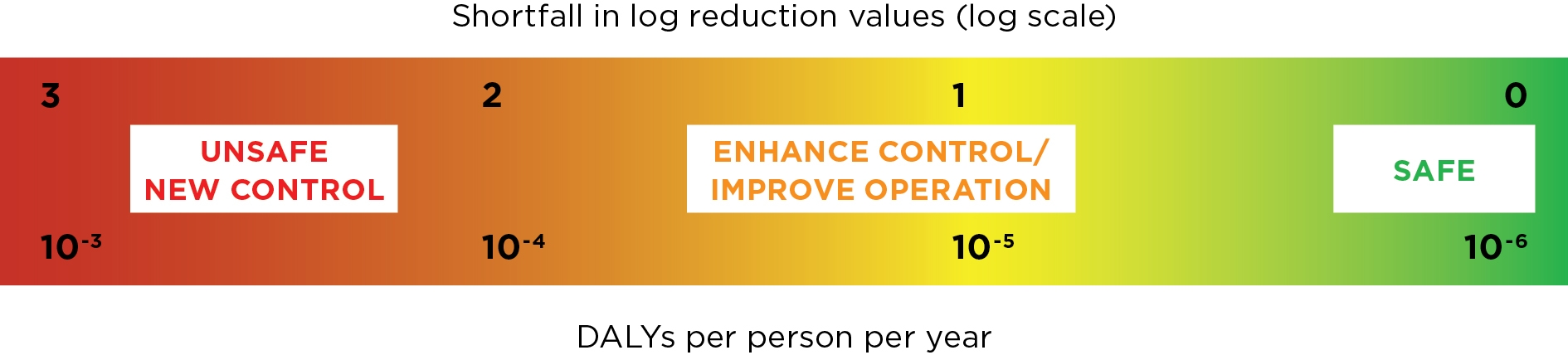

Figure 5.2 illustrates that water safety is considered along a continuum. A water safety continuum approach forms the basis for the development of short-, medium- and long-term water quality improvement programs. This approach incorporates a level of flexibility in dealing with short-term shortfalls in meeting the target (expressed as LRVs). The water safety continuum (Walker 2016) works on a graduated traffic light colour scale. The greater the shortfall between the estimated risk associated with the water supply and the 1 × 10⁻⁶ DALY (1 µDALY) pppy target, the more urgent and significant the action required to move the supply towards the benchmark value (Box 5.3).

Figure 5.2 Water safety continuum for drinking water supplies

Water Safety Continuum: achieving the health-based target

If a drinking water supply achieves:

10⁻⁶ DALYs pppy (1 µDALY pppy) or less: treatment barriers are adequate to produce safe water. Supplies that meet or exceed the benchmark are also likely to have sufficient treatment barriers in place such that short-term changes in raw water quality (e.g. short-term increases in source water challenge) will not pose a significant risk to consumers.

10⁻⁶ – 10⁻⁵ DALYs pppy: the existing treatment barriers are nearly meeting the target. While improvement is required, these improvements might be relatively minor (e.g. improving source water protection or optimising the performance of existing barriers) rather than major capital works.

10⁻⁵ – 10⁻⁴ DALYs pppy: the existing treatment barriers are not close to meeting the target. Additional source water protection or treatment is required.

greater than 10⁻⁴ DALYs pppy: the existing treatment barriers are not close to meeting the target. Major improvements are needed. The water supply risks may be approaching levels that could trigger a waterborne disease outbreak. Immediate action should be discussed with the relevant health authority or drinking water regulator.
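The continuum bands above can be expressed as a simple lookup. The following is a minimal sketch only: the function names and band labels are hypothetical, the upper bound of each range is treated as inclusive (the text does not say which band a boundary value belongs to), and the shortfall calculation assumes that each additional log10 of pathogen reduction lowers the estimated risk tenfold.

```python
import math

# Health outcome target stated in the text: 1 x 10^-6 DALY per person per year.
TARGET_DALY_PPPY = 1e-6


def continuum_band(daly_pppy: float) -> str:
    """Place an estimated risk on the water safety continuum.

    Band labels are illustrative; boundary values are assigned to the
    lower-risk band (an assumption, not stated in the text).
    """
    if daly_pppy <= 0:
        raise ValueError("estimated risk must be positive")
    if daly_pppy <= 1e-6:
        return "meets target"
    if daly_pppy <= 1e-5:
        return "nearly meeting target"
    if daly_pppy <= 1e-4:
        return "not close to target"
    return "major improvements needed"


def log_shortfall(daly_pppy: float) -> float:
    """Approximate shortfall, in log10 units, between estimated risk and target.

    Assumes risk scales linearly with pathogen concentration, so one extra
    log10 reduction lowers risk tenfold. Returns 0.0 when the target is met.
    """
    if daly_pppy <= 0:
        raise ValueError("estimated risk must be positive")
    return max(0.0, math.log10(daly_pppy / TARGET_DALY_PPPY))
```

For example, under these assumptions a supply estimated at 10⁻⁴ DALYs pppy would have a shortfall of about 2 log10 against the benchmark.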

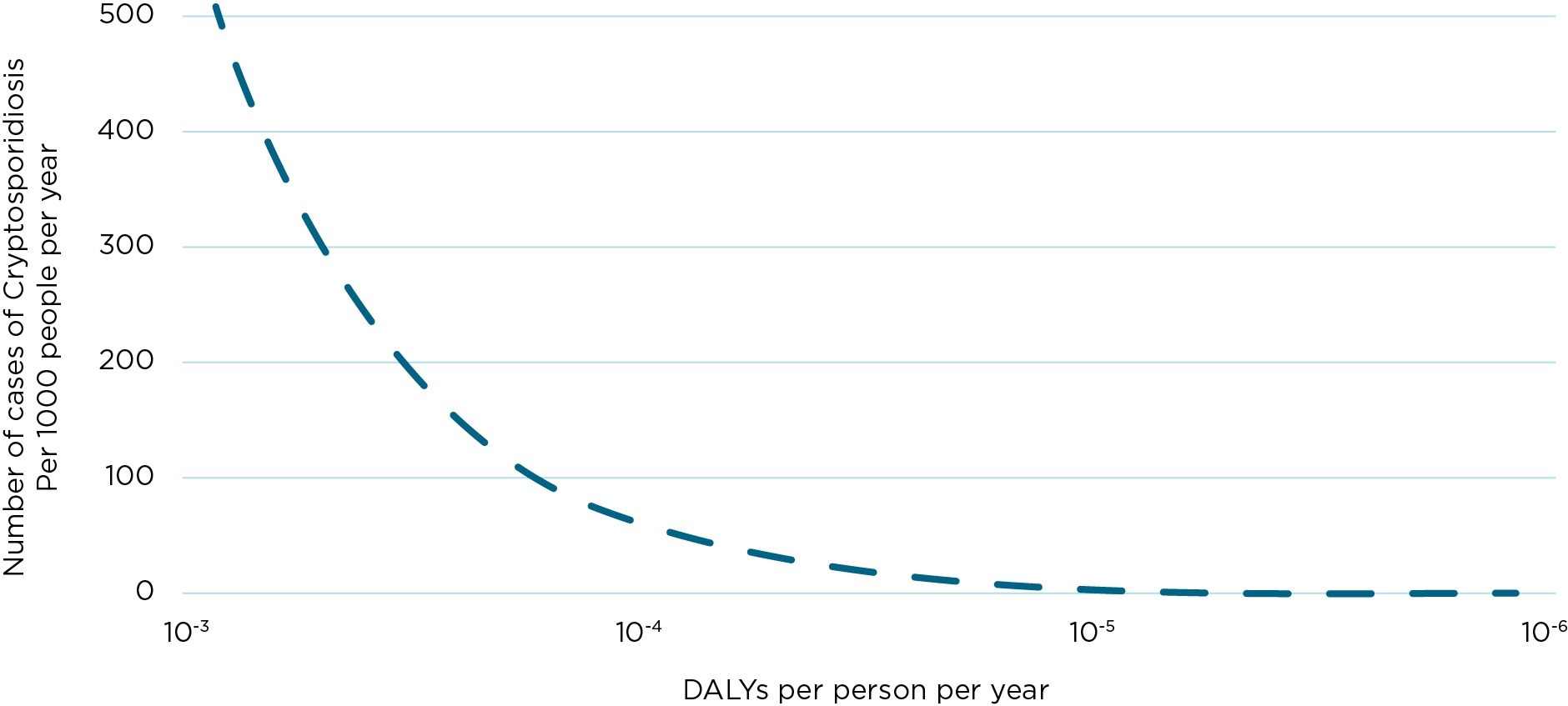

Figure 5.3 represents the theoretical decline in the number of illnesses per 1,000 people per year attributed to Cryptosporidium spp. as the quality of the water supply moves towards the health outcome target. It is noted that greater gains in reducing cases of disease are achieved by reducing the risk from 10⁻³ to 10⁻⁴ than by moving from 10⁻⁵ to 10⁻⁶. Therefore, incremental improvement programs should prioritise the highest-risk supplies.

Figure 5.3 Theoretical decline in the number of cases per 1,000 people per year attributed to Cryptosporidium

Source water category

To categorise source water, the level of contamination from enteric pathogens should be evaluated using the following steps:

Assess the vulnerability of the source water to determine the Class (see Table 5.2)

Assess and allocate a microbial band using raw water E. coli data (see Table 5.3)

Assess the vulnerability classification and microbial band allocation to characterise the source water into an appropriate category (see Table 5.4).

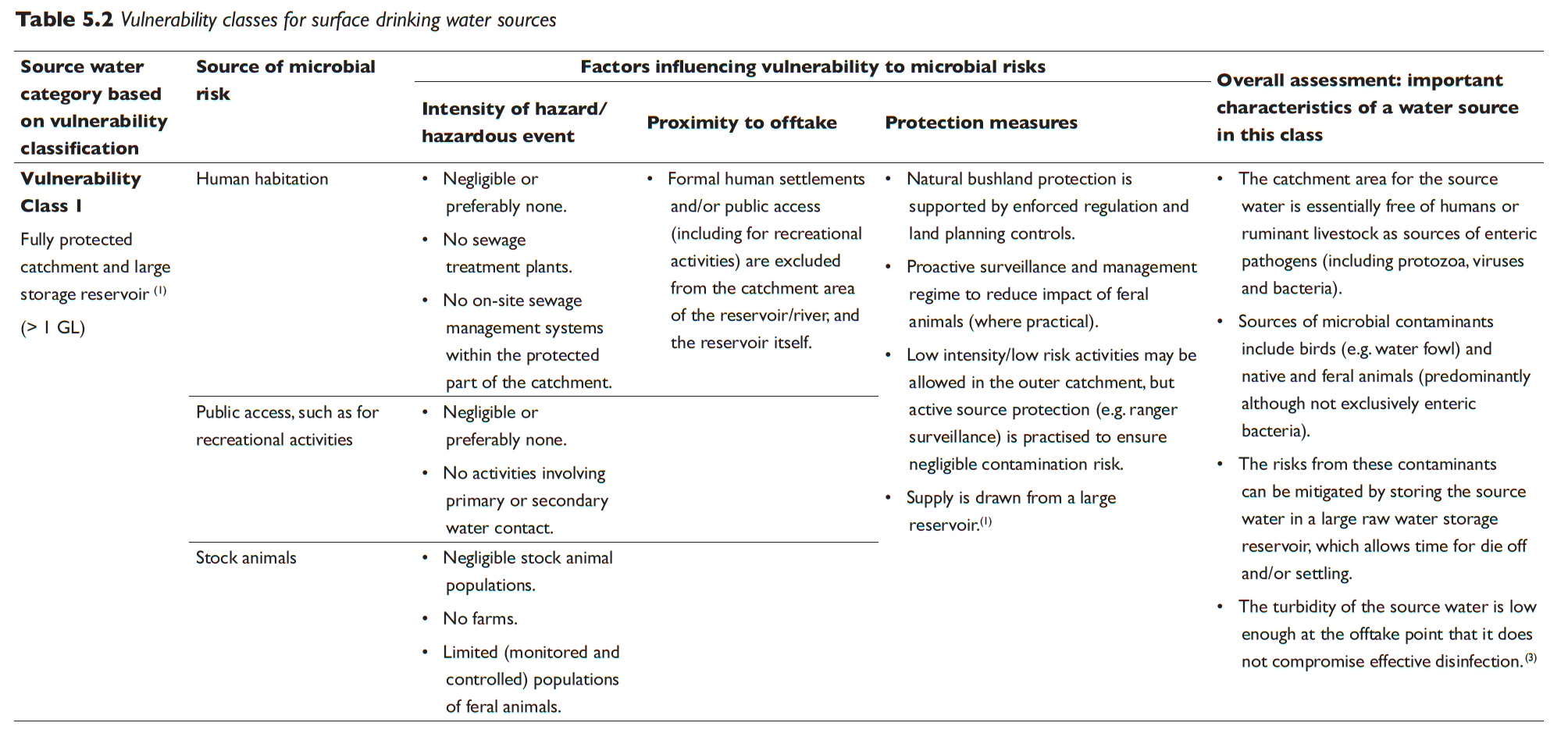

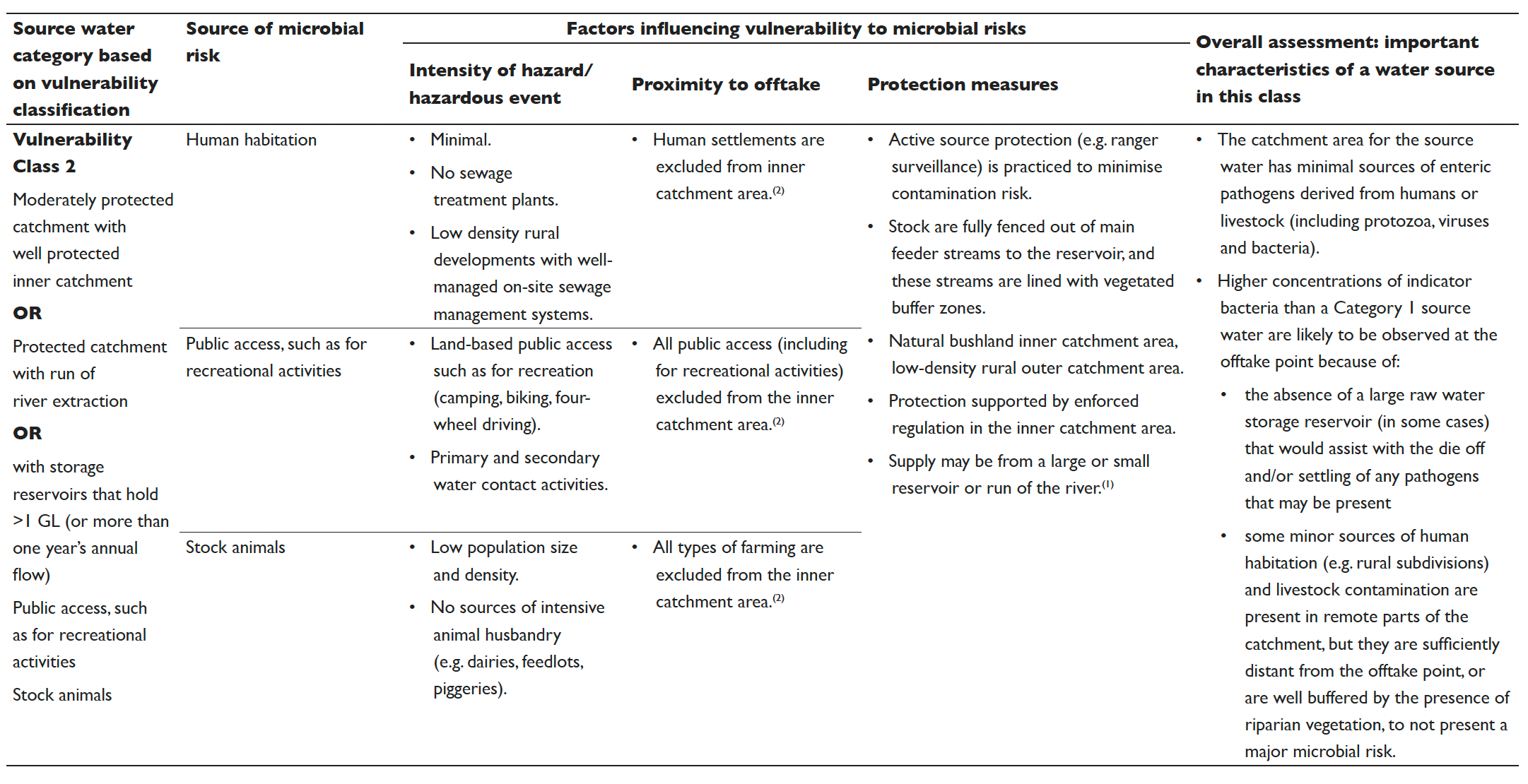

Vulnerability classification

A vulnerability classification consists of identifying sources of (and barriers to) enteric pathogen contamination within the drinking water supply catchment(s). Note that pumping and transfers of water means that the “catchment” might extend beyond the natural catchment boundary of the water supply intake. Additionally, multiple catchments may be blended and supply water to one point. All relevant catchments need to be considered in the vulnerability classification.

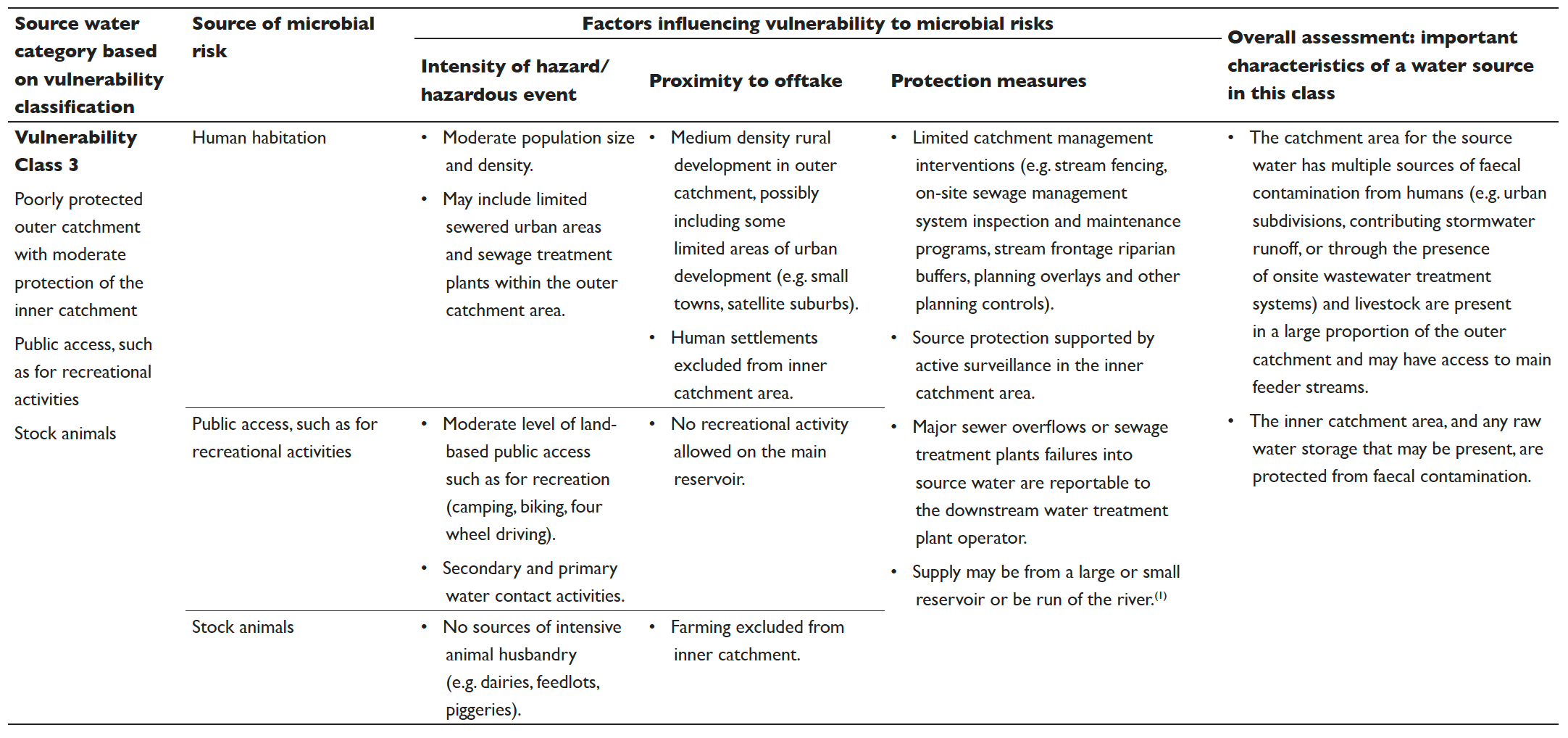

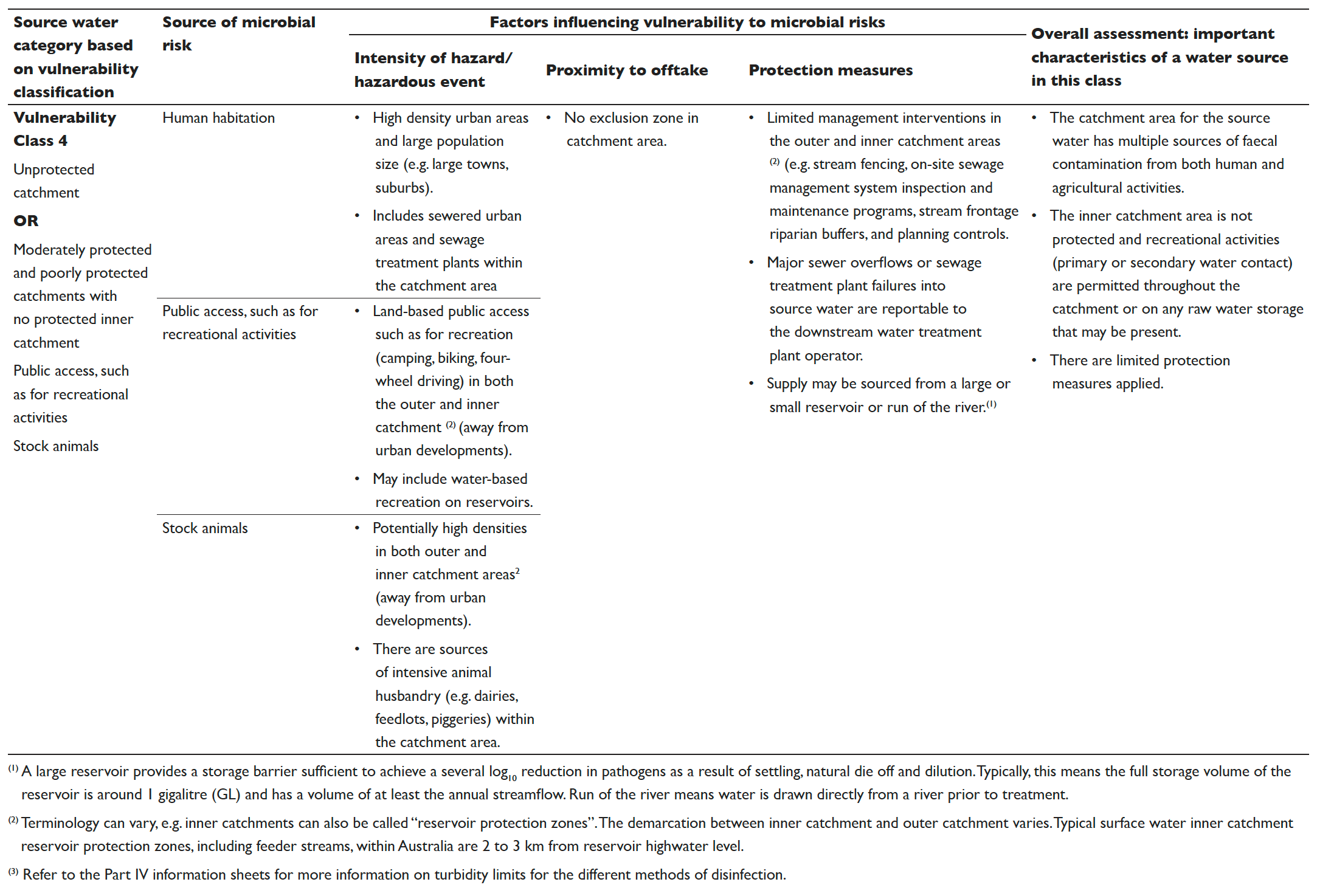

The first step of assessing the vulnerability classification of a catchment is to conduct a risk assessment to match the catchment to one of the source water category descriptions outlined in Table 5.2. A sanitary survey is a good way to confirm the assumptions of a vulnerability classification. To ensure the vulnerability classification remains accurate, sanitary surveys should be undertaken on a regular basis or after significant changes to the catchment.

Table 5.2 Vulnerability classes for surface drinking water sources

The results from the vulnerability classification are used to allocate the catchment into one of four source water vulnerability classes based on the criteria detailed in Table 5.2. The vulnerability classification process can also be used to identify protection measures that can lead to improvements in the vulnerability classification.

While Table 5.2 has been developed to assess surface water sources, the criteria can also be applied to the vulnerability classification of groundwater sources. Classification based on the assessment of recharging surface water is an appropriate starting point for evaluation (see Box 5.2) until the groundwater security or the aquifer’s ability to reduce pathogen concentrations can be clearly demonstrated. An evaluation of groundwater security must be based on credible scientific evidence to validate performance and inform the appropriate treatment requirements. This evidence should be discussed with the relevant health authority or drinking water regulator.

If groundwater security cannot be assured, the groundwater should be conservatively classified to be consistent with the vulnerability classification of the recharging surface water. This could result in the groundwater being assessed as high as a Category 4 in the overall source water assessment. Further details on groundwater assessment are provided in Chapter Nine of the WaterRA Good Practice Guide to Sanitary Surveys and Operational Monitoring to Support the Assessment and Management of Drinking Water Catchments (WaterRA 2021).

The information contained in Table 5.2 is intended to be a guide only and can be used with flexibility. There is some variability between catchments. However, most surface drinking water sources can be assigned a vulnerability class using Table 5.2. The classification system includes consideration of three key types of hazards/hazardous events (human habitation, public access and stock animals). The conditions of all three considerations need to be taken into account when assigning vulnerability classes. Other factors such as sensitivity to drought, bushfires, floods and compounding factors such as terrain (e.g. slope and ease of access) should also be considered on a system by system basis. Advice can be sought from the relevant health authority or drinking water regulator in instances of uncertainty or where evidence exists to justify a departure from this guidance.

Microbial band allocation (E. coli monitoring)

A microbial band allocation is used to provide a measure of the overall level of faecal contamination of the source water using the microbial indicator bacteria E. coli. The process of microbial band allocation is used to support a vulnerability classification. It can also be used to confirm or challenge a previous source water vulnerability classification. The microbial band allocation can pick up contamination that may not be easily identifiable through the vulnerability classification process.

To assist in the allocation process, three microbial bands have been defined by measured E. coli concentrations and are outlined in Table 5.3. These bands rely on the data originally published in the second edition of the WHO Guidelines for Drinking Water Quality (1996). They have been trialled for their suitability for Australian conditions by the Water Services Association of Australia (Walker et al. 2015).

Table 5.3 Summary of E. coli bands for source water intended for drinking water (adapted from WHO 1996 and Deere et al. 2014)

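| E. coli band | Maximum E. coli (organisms per 100 mL)⁽¹⁾ | Level of faecal contamination |
|---|---|---|
| 1 | <20 | Low |
| 2 | 20 to 2,000 | Moderate |
| 3 | >2,000 to 20,000 | Heavy |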

⁽¹⁾ Maximum E. coli results from raw water representative of inlet to treatment plant should be used unless data set is robust enough to use 95ᵗʰ percentile.

Source water that regularly returns E. coli concentrations >20,000 organisms per 100 mL (i.e. above the limit for E. coli band 3) should be reconsidered as a suitable source for drinking water supplies. Source water containing such high levels of E. coli is likely to be highly contaminated with faecal waste and associated enteric pathogens. If no alternative source exists consultation should occur with the relevant health authority or drinking water regulator to determine appropriate treatment requirements.
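The band thresholds in Table 5.3 can be sketched as a small function. This is an illustration only: the function name is hypothetical, and returning `None` for results above 20,000 organisms per 100 mL is simply one way to flag a source that falls outside the defined bands and needs to be reconsidered.

```python
from typing import Optional


def ecoli_band(ecoli_per_100ml: float) -> Optional[int]:
    """Allocate an E. coli band (1 to 3) following the Table 5.3 thresholds.

    Returns None when the value exceeds the upper limit of band 3, which the
    text treats as a trigger to reconsider the source or consult the relevant
    health authority or drinking water regulator.
    """
    if ecoli_per_100ml < 0:
        raise ValueError("E. coli concentration cannot be negative")
    if ecoli_per_100ml < 20:
        return 1
    if ecoli_per_100ml <= 2_000:
        return 2
    if ecoli_per_100ml <= 20_000:
        return 3
    return None
```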

The following issues should be considered during E. coli monitoring and microbial band allocation:

Appropriate selection of sampling site: Concentrations of E. coli should be measured at a raw water site that is representative of the inlet to the water treatment plant. This site should best represent the overall microbial challenge to the water treatment plant. The site should also be able to capture changes to water sources and support selective abstraction if implemented.

Appropriate sampling frequency: To accurately characterise the microbial risk posed by the source water, raw water should be sampled for E. coli frequently enough to capture short-term variations. The data set should include the variations experienced during events such as heavy rainfall. An example monitoring program might include a minimum of monthly or weekly samples, with an increased frequency of sampling during events such as rain or flooding to capture event peaks. Catchment characteristics will also inform the minimum sampling frequency. For example, a catchment subject to rapid changes in flows may need more frequent monitoring than slow moving rivers. Raw water turbidity is also a useful predictor of microbial peaks during events (WHO 1996).

Minimum required datasets and appropriate selection of maximum E. coli: The suggested minimum monitoring period to characterise microbial risk is two years, which would provide at least 100 data points with weekly sampling. A longer monitoring period may be needed if no events, such as heavy rainfall, occur or are captured by monitoring during the initial two-year period. Given the wide fluctuations that can occur with microbial concentrations in surface water, the peak concentrations should not be disregarded as outliers. The maximum E. coli result should be used for the allocation of a microbial band (Walker et al. 2015) unless the data set is robust enough to use the 95th percentile. The most appropriate E. coli value for band allocation should be selected in discussion with the relevant health authority or drinking water regulator. Samples from event-based monitoring (e.g. during heavy rainfall) should be included in the dataset, since omitting such events could underestimate the risk. At the same time, if source controls such as selective abstraction or alternate sources are used, the inclusion of event samples may overestimate the risk. The exclusion of event samples should only be considered in discussion with the relevant health authority or drinking water regulator when setting a source category. Based on the E. coli data interrogated in the dataset, the maximum value should be used to allocate a microbial band in accordance with Table 5.3.

Interim approach in the absence of data: There may be instances where there is not enough data to confidently allocate a microbial band, but the source water still needs to be categorised. The source water category will need to be based on the vulnerability classification alone until sufficient raw water E. coli monitoring is undertaken. A conservative E. coli band allocation is recommended while sufficient monitoring data is collected. This may place small water suppliers into the most conservative category due to a lack of data (see Box 5.4). Historical thermotolerant coliform data (which may have previously been referred to as faecal bacteria indicators) may be used in the short term as a substitute for E. coli data until sufficient E. coli monitoring data is obtained. Advice should be sought from the relevant health authority or drinking water regulator.
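The choice between the maximum and the 95th percentile described above can be sketched as follows. This is a hedged illustration: the function name is hypothetical, the 100-sample threshold is borrowed from the suggested two years of weekly sampling and is used here only as a stand-in for "robust enough", and the percentile method (inclusive linear interpolation from the Python standard library) is one of several in common use. The value actually used should still be agreed with the relevant health authority or drinking water regulator.

```python
import statistics
from typing import Sequence

# Assumption: about 100 results (two years of weekly sampling) is treated
# here as the minimum for considering the 95th percentile.
MIN_SAMPLES_FOR_PERCENTILE = 100


def representative_ecoli(results: Sequence[float],
                         use_95th_percentile: bool = False) -> float:
    """Return the E. coli value (per 100 mL) to use for band allocation.

    By default the maximum is returned, so peak results are not discarded
    as outliers. The 95th percentile is only returned when requested and
    when the dataset meets the assumed minimum size.
    """
    if not results:
        raise ValueError("no E. coli results supplied")
    if use_95th_percentile:
        if len(results) < MIN_SAMPLES_FOR_PERCENTILE:
            raise ValueError("dataset too small to justify the 95th percentile")
        # n=20 gives 19 cut points at 5% steps; the last one is the 95th percentile.
        return statistics.quantiles(results, n=20, method="inclusive")[18]
    return max(results)
```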

Small water suppliers

Small water suppliers (e.g. community-based systems supplying fewer than 1000 people) are well placed to undertake a vulnerability classification. This provides a useful starting point for assessing the safety of source waters and prioritising actions for improvement. Source water categories are defined in Table 5.2.

Small water suppliers may have very limited data and resources upon which to base their source water categorisation. However, small water suppliers still need to understand catchment contamination to determine the appropriate level of treatment. Where no E. coli monitoring data are available, water sources should be allocated a conservative default category (e.g. Category 4) pending the collection of sufficient data to justify otherwise. The suitability of allocating a less conservative category (Category 3 or lower) should be discussed with the relevant health authority or drinking water regulator.

While this may be a challenge, there is an important longer-term incentive to improve system understanding through microbial faecal indicator (E. coli) testing. Better understanding of microbial contamination of source waters through monitoring (detailed in Section 10.2.2) allows for better decision-making. A more accurate dataset will reduce reliance on conservative assumptions about enteric pathogen risks in source waters when assessing treatment requirements.

Assessment of source water category

The source water category should be determined by assessing the vulnerability classification with the result of the microbial band allocation. The possible outcomes of this categorisation process are outlined in Table 5.4.

Table 5.4 Source water category based on comparison of E. coli concentration with vulnerability classification

| Vulnerability classification | E. coli band 1⁽¹⁾ | E. coli band 2⁽¹⁾ | E. coli band 3⁽¹⁾ |
|---|---|---|---|
| Vulnerability class 1 | Category 1⁽²⁾ | Category 2⁽³⁾ | Requires further investigation⁽⁴⁾ |
| Vulnerability class 2 | Category 2⁽³⁾ | Category 2⁽²⁾ | Requires further investigation⁽⁴⁾ |
| Vulnerability class 3 | Requires further investigation⁽⁴⁾ | Category 3⁽²⁾ | Category 4⁽³⁾ |
| Vulnerability class 4 | Requires further investigation⁽⁴⁾ | Category 4⁽³⁾ | Category 4⁽²⁾ |

(1) Maximum E. coli results from raw water representative of inlet to treatment plant should be used unless data set is robust enough to use 95ᵗʰ percentile.

Combining the results of the E. coli data and vulnerability classification will result in one of the following outcomes:

(2) The two assessments are consistent and support each other.

(3) The result is feasible, but has a lower degree of confidence. Both the E. coli data and vulnerability classification should be re-examined to better understand the reasons for the misalignment. For example, if the E. coli results indicate a higher level of microbial risk than inferred by the vulnerability classification, then the vulnerability classification of the catchment should be repeated to determine if there are sources of microbial risk that were not previously identified. If the E. coli results indicate a lower level of microbial risk than inferred by the vulnerability classification, the suitability of a less conservative category should be discussed with the relevant health authority or drinking water regulator.

(4) This result requires further investigation. The results should be critically reviewed to understand the discrepancy. In the interim, the most conservative source water class option under consideration should be adopted. These results should be discussed with the relevant health authority or drinking water regulator.

Maximum E. coli concentrations of >2,000 to 20,000 per 100 mL, combined with a recorded vulnerability class of 1 or 2, require further discussion and/or investigation, including consideration of environmental E. coli (see Box 5.5).
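The Table 5.4 matrix and its footnotes can be represented as a lookup keyed on vulnerability class and E. coli band. The sketch below is illustrative only: the names are hypothetical, and it assumes the three outcome columns of Table 5.4 correspond to E. coli bands 1, 2 and 3 in that order.

```python
from typing import Tuple

INVESTIGATE = "Requires further investigation"

# (vulnerability class, E. coli band) -> (outcome, Table 5.4 footnote number)
# Footnote 2: assessments consistent; 3: feasible but lower confidence;
# 4: discrepancy requiring further investigation.
TABLE_5_4 = {
    (1, 1): ("Category 1", 2), (1, 2): ("Category 2", 3), (1, 3): (INVESTIGATE, 4),
    (2, 1): ("Category 2", 3), (2, 2): ("Category 2", 2), (2, 3): (INVESTIGATE, 4),
    (3, 1): (INVESTIGATE, 4), (3, 2): ("Category 3", 2), (3, 3): ("Category 4", 3),
    (4, 1): (INVESTIGATE, 4), (4, 2): ("Category 4", 3), (4, 3): ("Category 4", 2),
}


def source_water_category(vulnerability_class: int, ecoli_band: int) -> Tuple[str, int]:
    """Look up the Table 5.4 outcome and the footnote that qualifies it."""
    try:
        return TABLE_5_4[(vulnerability_class, ecoli_band)]
    except KeyError:
        raise ValueError("vulnerability class must be 1-4 and E. coli band 1-3") from None
```

For instance, `source_water_category(3, 3)` returns `("Category 4", 3)`, indicating a feasible result that should still be re-examined as described in footnote (3).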

Environmental E. coli

Environmental E. coli strains are genetically different from E. coli that comes from human and animal sources (Sinclair 2019). Under certain conditions, environmental strains of E. coli can multiply into blooms within surface waters. These blooms have been observed in several Australian drinking water reservoirs, although they are uncommon. A mismatch between the microbial monitoring band allocation and vulnerability classification may occur if environmental E. coli blooms occur in the surface water source.

Where E. coli blooms are suspected, before ruling out faecal sources it is important to obtain evidence that high numbers of E. coli are environmental in origin and not associated with faecal inputs to surface source waters.

Bloom strains can be identified through several methods (Sinclair 2019). These include:

the production of mucoid proteins when cultured

atypical biochemical test results

serotyping

detection of capsid proteins.

Simultaneous monitoring for enterococci and E. coli may assist in strengthening the evidence of a non-faecal (environmental) source (i.e. low numbers of enterococci as compared with E. coli).

If evidence demonstrates an environmental source of E. coli (such as a bloom), then these results may be omitted from the dataset used to set the microbial monitoring band. This should only be done in consultation with the relevant health authority or drinking water regulator. Blooms are very uncommon events—the discounting of results should only be considered when the evidence for their occurrence is clear. Even in the confirmed presence of an environmental E. coli bloom, it is likely that E. coli that are faecal in origin will also be present at lower concentrations. This could present a health risk.

Further details on the management of environmental E. coli are available in Sinclair (2019).

Source water protection

Catchment management practices that prevent and minimise the contamination of source waters are the first step in risk management.

There are limited options for catchment management for water supplies that take water from large rivers or unprotected catchments. In these cases, water suppliers should seek to improve conditions in sensitive areas of the catchment (such as land close to the offtake) and prevent further degradation of the outer catchment.

Effective catchment management and source water protection practices should provide the potential for the source vulnerability classification to be reduced. These strategies are outlined in Section 3.3.1 and Table A1.7 and may include:

preventing access of livestock to waterways

(re-)establishing vegetation alongside the water source (riparian zones)

developing waste management plans for intensive livestock facilities (this should include the reporting of waste management failures that may influence downstream water supply intakes)

managing onsite sewage treatment systems (including failure detection and reporting)

upgrading of sewage treatment facilities

adhering to guidelines for the safe application of biosolids

reporting and responding to failure of sewage treatment facilities that may influence downstream water supply intakes.

Reservoirs can provide a valuable barrier for pathogen inactivation and removal. They can contribute to meeting the treatment targets (expressed as LRVs), provided the performance of the barrier is validated. Table A1.8 provides more detail on LRVs. The performance of reservoirs for the removal of pathogens is site-specific and should be evaluated for a range of conditions (see Box 5.6).

Reservoirs and risk management: Understanding and managing reservoirs for control of pathogen risk (and LRVs)

Reservoirs are a valuable barrier for pathogen removal/inactivation and can contribute to the overall treatment target (expressed as LRVs) of a treatment train. When contributing to the LRV, it is essential that the pathogen removal performance of the reservoir be quantitatively evaluated for all relevant conditions. This will involve a site-specific evaluation of the fate and transport of pathogens in the reservoir.

Researchers have investigated the way in which pathogens move through reservoirs (Brookes et al. 2004). Some pathogens are settled by gravity if water is static. They may also be inactivated by temperature, sunlight and predation at varying rates. Pathogen risk can be reduced if water is stored for long enough. If waters are very clear and shallow, factors such as temperature and sunlight can also influence how much pathogens are reduced. This is particularly the case for organisms which are sensitive to UV light, such as Cryptosporidium.

Under relatively low flow conditions, reservoirs can provide good removal when pathogen ingress occurs some distance from the offtake. However, in practice reservoir storage barriers can be compromised by:

waterfowl perching on offtake infrastructure

public access, such as recreation close to water supply offtakes

short-circuiting through a combination of hydraulic forcing and temperature/density-related buoyancy, especially following rain events

resuspension of sediments containing pathogens that are able to survive in the environment following rain-events and rapid inflows into reservoirs.

To be confident in the ability of a reservoir to contribute to the LRV (through processes such as dilution, attenuation and settling) the following factors need to be understood:

the hydrodynamics of the reservoir

sources of direct pathogen ingress (e.g. from public access, including recreation if allowed or frequently observed)

the dynamics of water inflow (particularly high-volume inflow events such as floods or high flow managed releases).

Risk management preventive measures that rely upon reservoir storage should assess the impacts of these variables. In particular, ingress close to offtakes and short-circuiting from more distant inflows should be carefully evaluated. Appropriate controls should be included to reduce ingress of pathogens and increase retention time. Controls should also aim to promote reductions of concentrations present in feeder streams. Possible controls include:

restricting or excluding public access (including recreational access)

turbidity monitoring

water extraction management.

See Section 3.3.1 for more information on preventative measures and multiple barriers.

Assessment of treatment requirements

The level of treatment required to achieve safe water (e.g. the number and types of barriers) is dependent on the level of contamination. It should also include a margin for uncertainty. This section aims to provide guidance on recommended treatment targets for each of the four source water categories described in Table 5.4. The required treatment targets, expressed as LRVs, for each pathogen group (bacteria, viruses and protozoa) are summarised in Table 5.5.

The basis for the derivation of the LRVs in Table 5.5 is explained in detail in Appendix 3. WHO recommends using the QMRA framework to estimate the required level of treatment to achieve a health outcome target of 10⁻⁶ DALYs pppy (WHO 2011a; WHO 2016). This is consistent with the approach used for the Australian Guidelines for Water Recycling (NRMMC, EPHC and AHMC 2006; NRMMC, EPHC and NHMRC 2008).

Assumptions based on a review of the best available scientific evidence relevant to the Australian context were made to support implementation (see Appendix 3). Estimates of pathogen LRV requirements and of the LRV achieved by any treatment process are uncertain. Therefore, the LRVs included in Table 5.5 are rounded to the nearest 0.5 log and represent the upper end of ranges shown in Table A3.8. Additionally, due to the observed overestimate of infective oocysts with standard methods for Cryptosporidium in Australian catchments, the LRVs for protozoa in Table 5.5 have been reduced by 1 log10 for Category 2 to Category 4 catchments. Source-specific data for reference pathogens can be used to determine treatment requirements in consultation with the relevant health authority or drinking water regulator (Box 5.7). Appendix A3.11 provides more information. Information on LRV requirements and the LRV achieved by any treatment process will be revised and updated when more published data become available for consideration.

Table 5.5 Treatment targets for protozoa, bacteria and viruses given the source water type and E. coli results

| Source water category | Description | Maximum E. coli (organisms per 100 mL)⁽¹⁾ | Protozoa LRV⁽²⁾ | Virus LRV⁽²⁾ | Bacteria LRV⁽²⁾ |
|---|---|---|---|---|---|
| Category 1 | Surface water or groundwater under the influence of surface water, which is fully protected; or secure groundwater | <20 (E. coli band 1) | 0 | 0⁽³⁾ | 4.0 |
| Category 2 | Surface water, or groundwater under the influence of surface water, with moderate levels of protection | 20 to 2,000 (E. coli band 2)⁽⁴⁾ | 3.0 | 4.0 | 4.0 |
| Category 3 | Surface water, or groundwater under the influence of surface water, with poor levels of protection | 20 to 2,000 (E. coli band 2)⁽⁴⁾ | 4.0 | 5.0 | 5.0 |
| Category 4 | Unprotected surface water or groundwater under the influence of surface water that is unprotected | >2,000 to 20,000 (E. coli band 3) | 5.0 | 6.0 | 6.0 |

(1) Maximum E. coli results from raw water representative of inlet to treatment plant should be used unless data set is robust enough to use 95th percentile.

(2) Note that these values are based on estimation from first principles using QMRA with the details of their derivation summarised in Appendix 3 and the evidence cited in that Appendix.

(3) Fully protected Category 1 source waters are characterised as having negligible or no human access. An LRV target of zero for viruses is set as humans are the predominant source for enteric viruses.

(4) Maximum E. coli results for raw water monitoring for source water Categories 2 and 3 are within E. coli band 2. Distinguishing between Categories 2 and 3 is confirmed based on the results of the vulnerability classification.
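The treatment targets in Table 5.5 can be held as a lookup from source water category to required LRV per pathogen group. This is a sketch only: the names are hypothetical, and it assumes the three LRV values listed for each category are ordered protozoa, viruses, bacteria, which is inferred from footnote (3) (the zero target for viruses) being attached to the second value for Category 1.

```python
from typing import Dict

# Required LRVs by source water category (values transcribed from Table 5.5).
# Column order (protozoa, viruses, bacteria) is an inference from footnote (3).
LRV_TARGETS: Dict[int, Dict[str, float]] = {
    1: {"protozoa": 0.0, "viruses": 0.0, "bacteria": 4.0},
    2: {"protozoa": 3.0, "viruses": 4.0, "bacteria": 4.0},
    3: {"protozoa": 4.0, "viruses": 5.0, "bacteria": 5.0},
    4: {"protozoa": 5.0, "viruses": 6.0, "bacteria": 6.0},
}


def required_lrvs(category: int) -> Dict[str, float]:
    """Return a copy of the required LRVs for a source water category (1 to 4)."""
    if category not in LRV_TARGETS:
        raise ValueError("source water category must be 1, 2, 3 or 4")
    return dict(LRV_TARGETS[category])
```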

If the water supplier considers the source water categorisation and required LRV to be too high and unnecessarily conservative for a specific site, it must be discussed with the relevant health authority or drinking water regulator. A more detailed site-specific assessment may be required including application of QMRA with direct analysis of pathogen data (Box 5.7). The relevant health authority or drinking water regulator is responsible for deciding whether a lower category is sufficient to achieve safety.

Enteric Pathogen data

Direct measurement (or enumeration) of enteric pathogens from surface water samples is a valuable tool to support “knowing your system”. However, testing for enteric pathogens is not required for routine classification of systems in these Guidelines because of:

uncertainties associated with interpretation of the analytical results

the high cost of sample analysis.

Where pathogen testing can be undertaken or pathogen data are available, it is strongly recommended that these data be considered. It is important to ensure that:

the pathogen data are considered alongside the indicator data and vulnerability classification to ensure that the overall assessment is consistent. For example, a small number of samples in which the pathogens of interest are not detected does not negate long-term high indicator counts and/or a highly impacted catchment.

the analytical methods that were applied to generate the results are understood and the health implications of the result are clear. For example, did the test target only human-infectious pathogens or organisms belonging to a broader group? Did the test only target pathogens still capable of causing disease or did it target all pathogens (both “alive” and “dead”)?

the statistical methods applied to estimate the mean and upper bound concentration are considered alongside the nature of the data. Were the data direct counts, presence/absence results or categorical most probable numbers (MPNs)? What was the sample volume? Was the result corrected for the recovery (performance) of the method?

the dataset is sufficiently robust to account for the range of conditions that might be experienced. It should cover the range of flood and drought cycles, as well as extreme peak and failure mode events. It may take hundreds of samples and many years to obtain such a robust dataset.

Further practical details can be found in the industry implementation documents, such as those listed in Box 5.1.

Methods used to obtain and analyse pathogen data should be reported clearly when submitted to the relevant health authority or drinking water regulator. Further information on criteria for reporting pathogen data (including data quality and methods) to be used in QMRA is provided in the WHO guidance document (WHO 2016).

Meeting the treatment requirements

The treatment target is expressed as a single required LRV for each pathogen group. The total log10 reduction (calculated by summing the log10 reduction credits of individual treatment or environmental barriers) must meet or exceed the LRV required in Table 5.5.

This does not replace the multiple barrier approach. Both principles should be maintained in the design and operation of the system. As a general principle, a maximum of 4 log10 reduction is applied to individual treatment processes. Published design criteria for disinfection processes are typically limited to demonstrating a 4 log10 reduction. Furthermore, setting an upper limit of 4 log10 supports the adoption of multiple barriers by discouraging reliance on a single treatment process to achieve the required LRV.
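The summation and the 4 log10 cap described above can be sketched as follows. The pathogen group names, barrier credits and required LRVs are hypothetical placeholders and are not taken from Table 5.5 or Table 5.6. Only the cap of 4 log10 per process comes from the text.

```python
MAX_CREDIT_PER_PROCESS = 4.0  # general upper limit per individual process

# Hypothetical log10 reduction credits per barrier and pathogen group
barrier_credits = {
    "filtration": {"protozoa": 3.0, "bacteria": 2.0, "viruses": 1.0},
    "uv": {"protozoa": 4.5, "bacteria": 4.0, "viruses": 0.5},
    "chlorination": {"protozoa": 0.0, "bacteria": 4.0, "viruses": 4.0},
}

# Hypothetical required LRVs for one source water category
required_lrv = {"protozoa": 5.0, "bacteria": 6.0, "viruses": 6.0}

def total_lrv(credits, group):
    """Sum the credits for one pathogen group, capping each process."""
    return sum(
        min(per_group.get(group, 0.0), MAX_CREDIT_PER_PROCESS)
        for per_group in credits.values()
    )

def assess(credits, required):
    """Return {group: (total LRV, target met?)} for every required group."""
    return {
        group: (total_lrv(credits, group), total_lrv(credits, group) >= target)
        for group, target in required.items()
    }

for group, (total, ok) in assess(barrier_credits, required_lrv).items():
    print(f"{group}: total {total:.1f} log10, target met: {ok}")
```

In this illustration the UV credit for protozoa is capped from 4.5 to 4.0, and the virus target is missed by 0.5 log10, which would point to the need for an additional or better-performing barrier.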

Indicative LRVs for common treatment barriers are summarised in Table 5.6. While these LRVs are achievable they should not be adopted as default values.

Table 5.6 Indicative pathogen LRV potentially attributable to treatment barriers

The performance of an individual drinking water treatment process will vary depending on the specific plant design and operating conditions. LRVs for individual processes at specific sites need to be validated as summarised in Table 5.6 and described in Section 9.8. The purpose of validation is to demonstrate that claimed LRVs are achieved under defined operating conditions providing that operational monitoring targets (i.e. critical limits) are achieved (e.g. filtered water turbidity, transmitted UV light dose).

Site-specific validation does not typically mean challenge testing. Manufacturers of devices (e.g. UV light disinfection systems and membrane filters) often validate their devices before marketing them. In these cases, validation does not need to be repeated providing the operating conditions defined by the manufacturer are relevant to the site in question. For example, a manufacturer may specify that validation of a UV light disinfection system is only applicable for waters above a defined transmissivity (the percentage of UV light that passes through the water). For chlorination, the product (C.t) of the residual chlorine concentration (C) and the corresponding disinfectant contact time (t) in minutes that is required to achieve the specified LRV depends on temperature and pH.
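The C.t relationship for chlorination can be shown with a short sketch. It assumes C is expressed in mg/L, so that C.t is in mg.min/L, and it takes the required C.t as an input that would come from validated criteria for the site's temperature and pH. The numbers and function names are hypothetical.

```python
def ct_value(residual_mg_per_l, contact_time_min):
    """C.t in mg.min/L: residual concentration multiplied by contact time."""
    if residual_mg_per_l < 0 or contact_time_min < 0:
        raise ValueError("residual and contact time must be non-negative")
    return residual_mg_per_l * contact_time_min

def ct_is_sufficient(residual_mg_per_l, contact_time_min, required_ct):
    """True if the achieved C.t meets or exceeds the required C.t."""
    return ct_value(residual_mg_per_l, contact_time_min) >= required_ct

# Hypothetical operating point and hypothetical required C.t
achieved = ct_value(0.5, 30)           # 0.5 mg/L held for 30 minutes
meets = ct_is_sufficient(0.5, 30, 15)  # required C.t of 15 mg.min/L (assumed)
print(f"C.t = {achieved} mg.min/L, sufficient: {meets}")
```

Because the required C.t changes with temperature and pH, the same operating point may be sufficient under one set of water conditions and insufficient under another.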

In other cases, such as conventional and direct media filtration, LRVs can be adopted from accepted industry norms (e.g. USEPA 2006) providing defined operating conditions and operational monitoring targets are achieved. This includes both operational monitoring of target criteria such as filter effluent turbidity, as well as the good practice operation of supporting processes (e.g. coagulation, mixing, flocculation, sedimentation or flotation, filter ripening, backwash processes, controls on waste recycling). Operational monitoring and compliance with target criteria and critical limits, underpinned by good operational practices and supporting programs, are the key to ensuring that pathogen removal is maintained as expected.

While the LRVs of individual treatment barriers within a treatment train are typically summed to quantify the overall LRV, it is known that the performance of individual barriers is often not independent. Poor performance of one barrier (e.g. coagulation) may affect another (e.g. filtration), further highlighting the need for close control of operating conditions. Where possible, assessment of sensitivities of the overall treatment train should be considered when developing management protocols, including operational monitoring.
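One simple way to explore the sensitivity of a treatment train is to recompute the overall LRV under upset scenarios. The sketch below is only an illustration: the nominal credits, the reduced filtration credit under poor coagulation and the required LRV are all hypothetical values, not validated figures.

```python
# Hypothetical nominal log10 credits for a single pathogen group
nominal_credits = {"filtration": 3.0, "chlorination": 4.0}

# Hypothetical upset scenarios: each overrides the credits it degrades.
# Poor coagulation is assumed here to reduce the downstream filtration credit.
scenarios = {
    "nominal": {},
    "poor coagulation": {"filtration": 1.0},
}

REQUIRED_LRV = 6.0  # hypothetical target for this pathogen group

def scenario_total(nominal, overrides):
    """Overall LRV after applying a scenario's degraded credits."""
    return sum({**nominal, **overrides}.values())

def shortfalls(nominal, all_scenarios, required):
    """Return {scenario: log10 shortfall against the required LRV}."""
    return {
        name: max(0.0, required - scenario_total(nominal, overrides))
        for name, overrides in all_scenarios.items()
    }

for name, gap in shortfalls(nominal_credits, scenarios, REQUIRED_LRV).items():
    print(f"{name}: shortfall {gap:.1f} log10")
```

Scenarios that produce a shortfall indicate where operational monitoring and critical limits deserve the closest attention.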

For most practical scenarios in Australia, the target treatment implementation may include:

Category 1: Single barrier only of disinfection or filtration. This would most commonly be chlorination, but could sometimes be chloramination, UV, ozone or filtration.

Category 2: Direct filtration (filtration without a sedimentation process between the coagulant dosing and filtration steps) or membrane filtration, followed by a single disinfection barrier. This disinfection barrier would usually be chlorination but sometimes chloramination, UV or ozone.

Category 3: Full conventional filtration (filtration that has a sedimentation or a flotation process between the coagulant dosing and filtration steps) or membrane filtration following a sedimentation process, followed by a single disinfection barrier. The disinfection barrier would usually be chlorination but sometimes chloramination, UV or ozone.

Category 4: Filtration followed by two disinfection barriers. The disinfection barriers would usually be UV and chlorine disinfection but sometimes chloramination, ozone or an additional filtration barrier may be applied.

Other treatment options and combinations may be used providing they are validated to deliver the required LRVs. Residual disinfection should be maintained wherever possible to provide protection from backflow and ingress, and to help inhibit biofilm growth.

In practical terms, when assigning categories to source waters, the primary objective is to identify whether Cryptosporidium may be present in sufficient concentrations that the treatment train requires more than one barrier (i.e. a filtration and/or UV barrier rather than only a chlorination barrier). Chlorination is typically ineffective for Cryptosporidium. At a minimum, a disinfection barrier (usually chlorination) is required for all source waters used for drinking water in Australia. Regardless of the category of source water, disinfection mitigates microbial risks in distribution systems, from both enteric pathogens and opportunistic pathogens.

Management of the distribution network

Waterborne disease outbreaks can occur due to post-treatment contamination. Health-based targets were introduced in the US in 1996. Around 90% of outbreaks since then have been attributed to contamination within the distribution system.

Treated drinking water can become re-contaminated with enteric pathogens via water storage tanks and the distribution network. This can be due to:

ingress of contaminated material during periods of low pressure

negative pressure transients (e.g. due to water hammer)

backflow

cross connection

compromised hygiene during maintenance or repairs

contamination of water storages from birds or access by other vertebrates.

For details on the protection and maintenance of distribution systems see Chapter 3.3.1.

Further information on the management of safe distribution networks is available in a number of documents (Deere and Mosse 2016; Kirmeyer 2000; WHO 2014; Martel et al. 2006; Water Research Australia 2015).
